From Loops to Lightning - How Transformers Outran RNNs

Mar 21, 2025

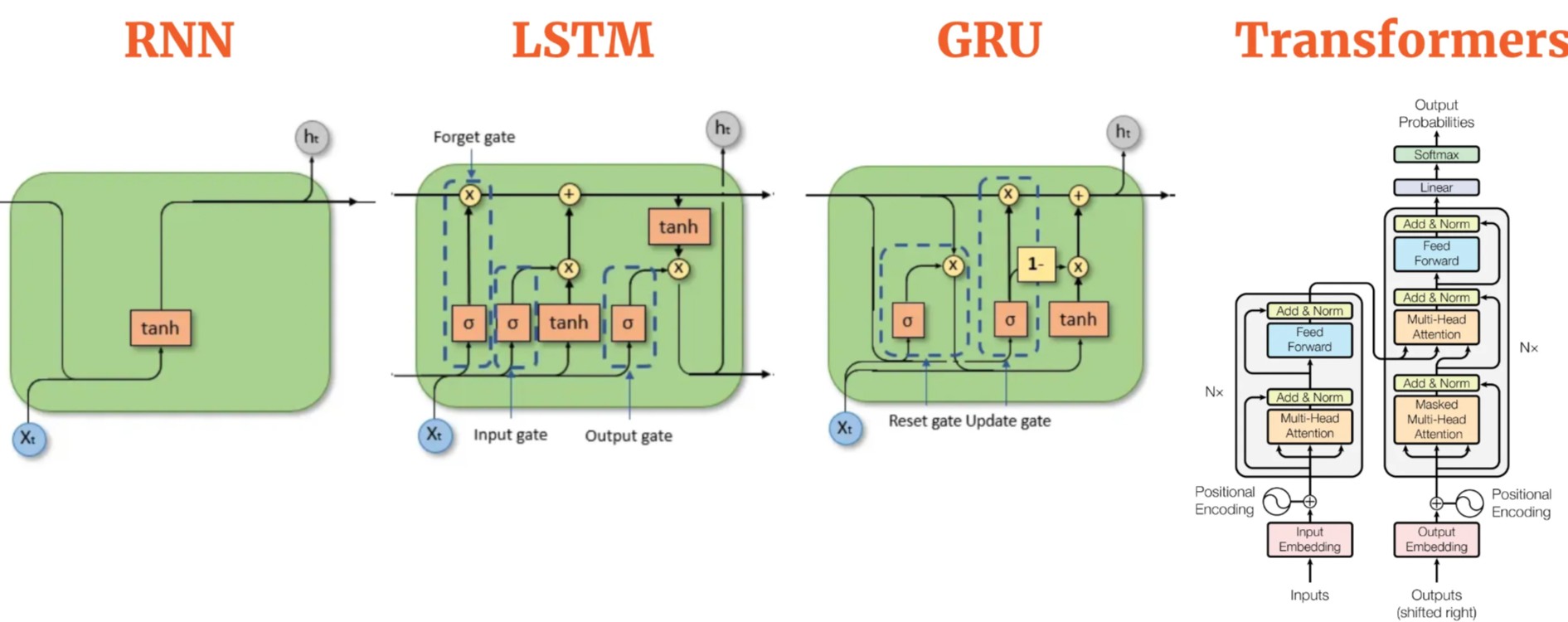

This article takes an in-depth look at the Transformer architecture, which has revolutionized natural language processing. It focuses on attention blocks, the model's key component, which relate every word in a sentence to every other word in parallel so that each word is represented in its context.
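As a rough illustration of what an attention block computes, here is a minimal sketch in plain Python. It assumes the scaled dot-product form of attention and leaves out the learned query, key, and value projections a real Transformer layer would have; the function names and the toy `tokens` vectors are made up for this example.

```python
import math

def softmax(scores):
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    # Scaled dot-product attention over lists of equal-length vectors.
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        w = softmax(scores)
        weights.append(w)
        # Each output is a weighted mix of all value vectors.
        outputs.append([sum(wj * v[i] for wj, v in zip(w, values))
                        for i in range(len(values[0]))])
    return outputs, weights

# Hypothetical 2-dimensional embeddings for a three-word sentence.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
```

Each row of `weights` sums to 1 and tells how much one word draws on every other word. No row depends on a previous row, which is why the computation can run in parallel across the sentence.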

Recurrent Neural Networks uncovered — The power of memory in deep learning

Mar 12, 2025

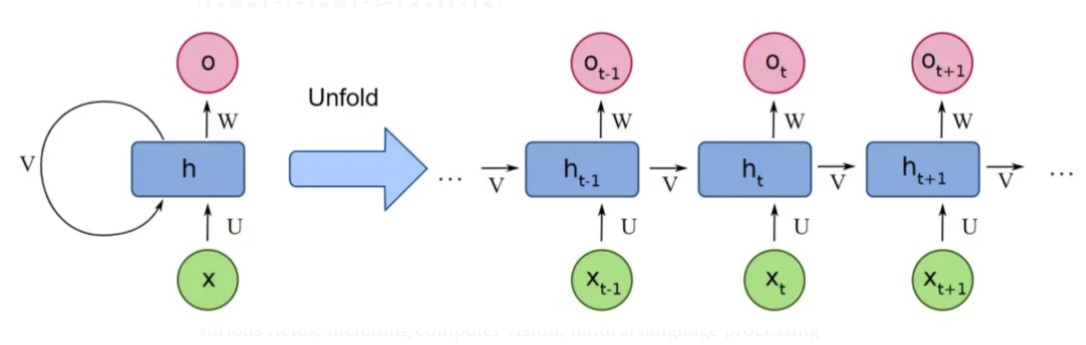

This article describes how deep learning has transformed various fields, highlighting the strengths and limitations of Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs). It explains that RNNs process sequential data by maintaining a memory of previous inputs, which makes them well suited to tasks like natural language processing and speech recognition. The article also discusses advanced RNN variants such as LSTM and GRU, which improve the learning of long-term dependencies. Finally, it mentions the evolution toward Transformer models, which have become the new standard for handling complex sequence data efficiently.
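The "memory" of an RNN can be sketched with a single scalar hidden state. This is a simplified illustration, not the article's code: the weights `w_x`, `w_h`, and `b` are arbitrary values picked for the example, and a real RNN would use weight matrices learned during training.

```python
import math

def rnn_forward(inputs, w_x=0.5, w_h=0.8, b=0.0):
    # Run a one-unit vanilla RNN over a sequence of scalar inputs.
    h = 0.0  # hidden state starts empty
    states = []
    for x in inputs:
        # The new state mixes the current input with the previous state.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

# Only the first input is non-zero, yet later states stay non-zero:
# the hidden state carries a fading trace of the earlier input.
states = rnn_forward([1.0, 0.0, 0.0])
```

The trace shrinks at every step, which hints at why plain RNNs struggle with long-term dependencies and why gated variants such as LSTM and GRU were introduced. The loop also has to run one step at a time, since each state depends on the previous one.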

Deep Learning basics for video — Convolutional Neural Networks (CNNs) — Part 2

Mar 1, 2025

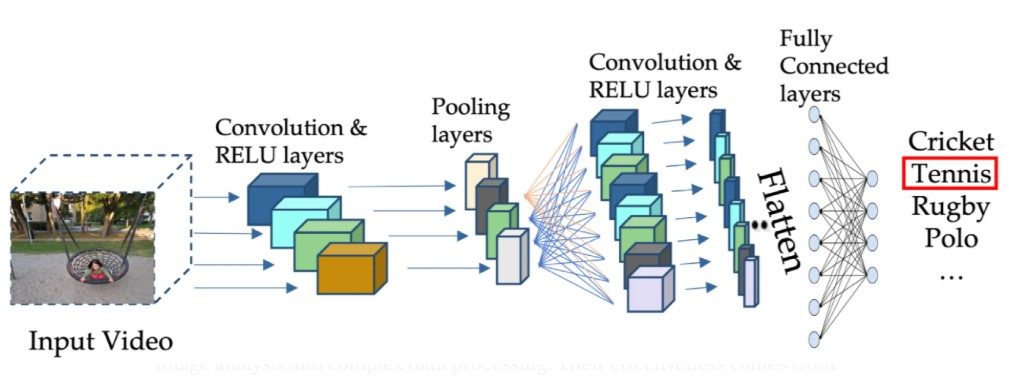

This article explains the activation functions commonly used in neural networks, such as Sigmoid, Tanh, and ReLU, highlighting their advantages and limitations. It describes the vanishing gradient problem, in which gradients shrink as they are propagated backward through many layers and slow down learning in deep networks. The article also covers how backpropagation uses gradients to adjust weights and improve model predictions. Finally, it explains pooling layers and fully connected layers, essential components of convolutional neural networks for feature reduction and decision making.
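To make the vanishing gradient problem concrete, the sketch below multiplies activation derivatives across a stack of layers. It is a deliberate simplification: it ignores the weights and assumes the same pre-activation value `x` at every layer, and the helper `chained_gradient` is a name invented for this example.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_grad(x):
    # Derivative of sigmoid; its maximum is 0.25, reached at x = 0.
    s = sigmoid(x)
    return s * (1.0 - s)

def tanh_grad(x):
    # Derivative of tanh; its maximum is 1.0, reached at x = 0.
    return 1.0 - math.tanh(x) ** 2

def relu(x):
    return max(0.0, x)

def relu_grad(x):
    # Derivative of ReLU: 1 for positive inputs, 0 otherwise.
    return 1.0 if x > 0 else 0.0

def chained_gradient(grad_fn, x, depth):
    # Product of activation derivatives across `depth` layers.
    g = 1.0
    for _ in range(depth):
        g *= grad_fn(x)
    return g

sigmoid_chain = chained_gradient(sigmoid_grad, 0.0, 10)  # 0.25 ** 10
relu_chain = chained_gradient(relu_grad, 1.0, 10)        # stays at 1.0
```

Even in the best case for Sigmoid, ten layers scale the gradient by 0.25 ** 10, which is below one millionth, while ReLU passes it through unchanged for positive inputs.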

Deep Learning basics for video — Convolutional Neural Networks (CNNs) — Part 1

Feb 20, 2025

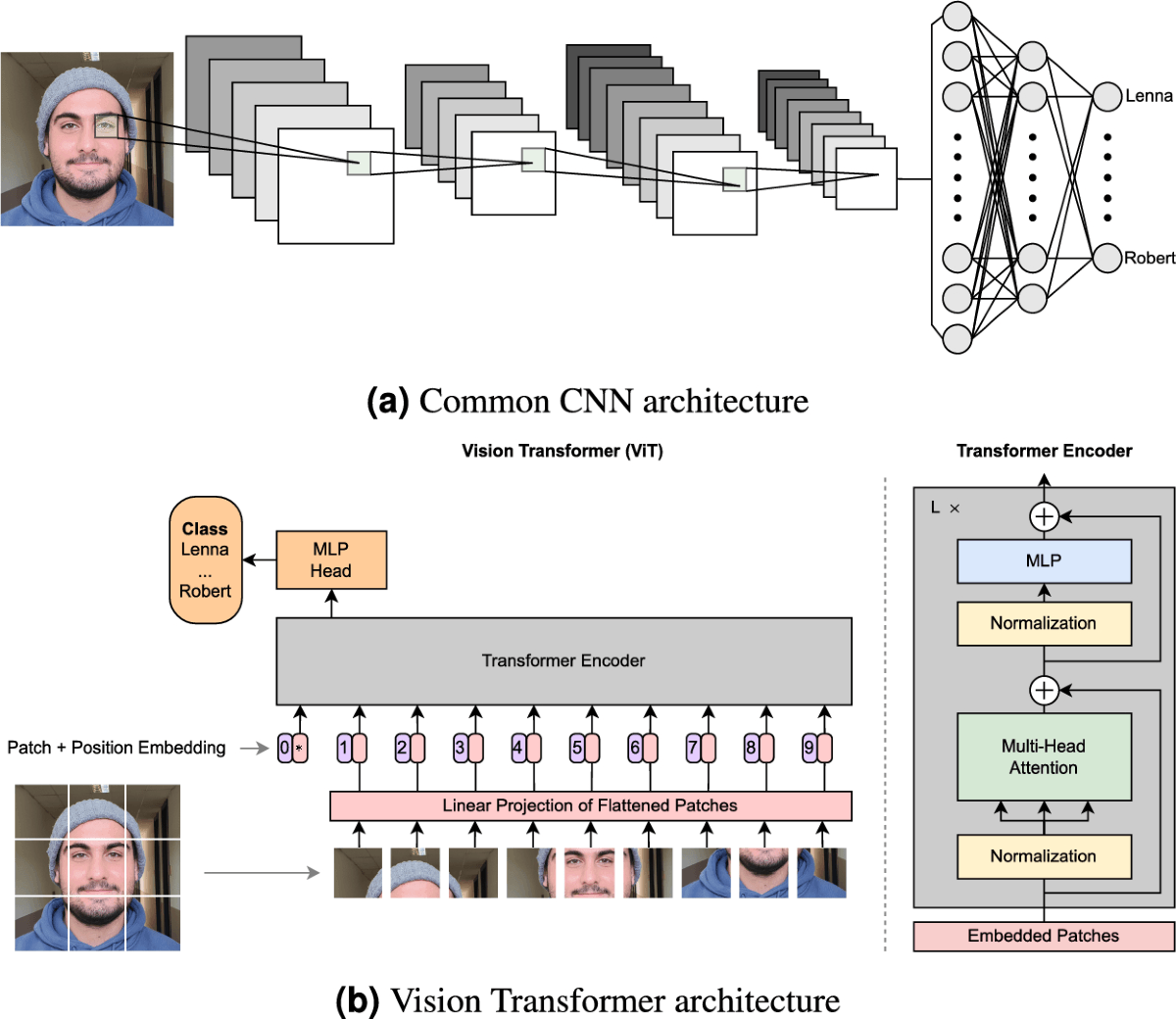

This article covers the basics of Convolutional Neural Networks (CNNs), a key deep learning technology inspired by the human visual system. It explains how CNNs analyze images by sliding filters over them (convolution) to detect features like edges, textures, and shapes. It also explains why activation layers matter: they add non-linearity, which lets CNNs learn complex patterns for tasks like object recognition. Future parts will explore different types of activation functions and deeper concepts.
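Here is a small sketch of how a filter picks out an edge. The image and kernel values are made up for the example, and the filter is hand-written rather than learned; as in most deep learning libraries, the "convolution" slides the kernel without flipping it (strictly speaking, a cross-correlation).

```python
def conv2d(image, kernel):
    # Slide the kernel over the image with no padding and stride 1.
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

def relu_map(feature_map):
    # Activation layer: keep positive responses, zero out the rest.
    return [[max(0, v) for v in row] for row in feature_map]

# Toy image: dark on the left (0), bright on the right (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-written filter that responds to dark-to-bright vertical edges.
kernel = [
    [-1, 1],
    [-1, 1],
]
feature_map = relu_map(conv2d(image, kernel))
# feature_map == [[0, 2, 0], [0, 2, 0]]
```

The response is non-zero only in the column where the brightness changes, which is the edge the filter was designed to detect.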

The Rise of Smart Video Processing - From CNNs to Transformers

Feb 18, 2025

This article describes the rapid growth and importance of video content in today's digital world. It explains how video understanding evolved from analyzing individual frames to using deep learning and Transformer models that capture both spatial and temporal information. These advances have made video analysis faster and more accurate, opening new possibilities in fields like security, healthcare, and autonomous driving. The article also previews future discussions of the specific techniques behind these innovations.

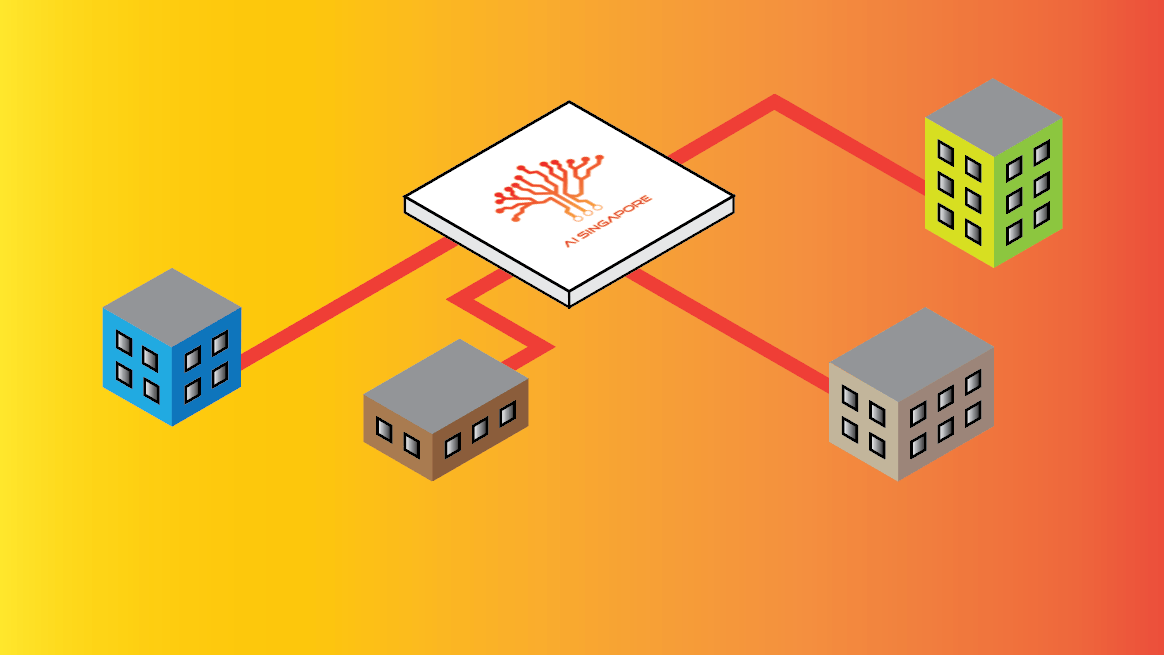

Horizontal Federated Learning

Mar 3, 2024

This article highlights key findings on Horizontal Federated Learning (HFL). It demonstrates that HFL helps preserve data privacy and is feasible in practice. It also shows that HFL can achieve good results with appropriate hyperparameters and tools.
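One common way to combine client models in federated learning is weighted averaging of their parameters (often called federated averaging). The summary does not say which aggregation method the article uses, so the sketch below is only an assumption for illustration, with a made-up function name and toy numbers.

```python
def federated_average(client_weights, client_sizes):
    # Average client parameters, weighting each client by its sample count.
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]

# Two hypothetical clients with the same model shape but different data sizes.
global_weights = federated_average(
    client_weights=[[1.0, 2.0], [3.0, 4.0]],
    client_sizes=[1, 3],
)
# global_weights == [2.5, 3.5]
```

Only model parameters reach the server; the raw training samples stay with each client, which is where the privacy benefit comes from.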